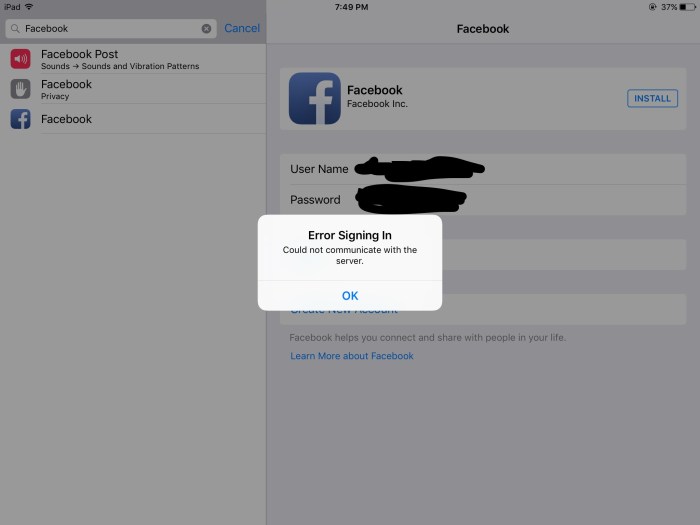

Ever wondered what happens behind the scenes when Facebook goes down? It’s not just a simple “oops”; it’s a complex web of interconnected servers, intricate configurations, and a whole lot of engineering magic (or, sometimes, mishaps). This deep dive explores the architecture of Facebook’s server infrastructure, the critical role of configuration in preventing outages, and how different configurations affect downtime. We’ll unravel the mysteries of redundancy and load balancing, and look at how network and software configurations keep billions of daily interactions flowing smoothly.

From sprawling data centers humming with powerful servers to the software that keeps everything running, we’ll uncover the details that determine whether your newsfeed loads instantly or leaves you staring at a blank screen. We’ll work through concrete scenarios, highlighting common configuration mistakes and the best practices that build digital resilience. Get ready to understand the tech behind those frustrating moments when Facebook disappears, and how engineers work tirelessly to bring it back.

Facebook’s Server Infrastructure

Keeping a billion-plus users connected requires a seriously robust infrastructure. Facebook’s server setup isn’t your average web hosting; it’s a sprawling, highly engineered system designed for incredible scalability and resilience. Think of it as a digital city, constantly adapting and evolving to handle massive traffic surges and unexpected events.

Facebook’s Server Architecture

Facebook’s infrastructure is built on a massive scale, using a distributed architecture spread across numerous data centers around the globe. This architecture combines custom-built hardware with open-source software for flexibility and efficiency. The core components include a vast network of servers, load balancers that distribute traffic, content delivery networks (CDNs) that cache data closer to users, and sophisticated monitoring and management systems. All of this works together like a finely tuned machine to serve billions of requests every day.

Server Types and Roles

Facebook’s operation relies on a diverse range of servers, each playing a specific role. Web servers handle user requests and render newsfeeds and other content. Database servers store the massive amounts of user data, posts, and interactions. Cache servers store frequently accessed data to speed up responses. Application servers run the software behind Facebook’s features. Finally, specialized servers handle tasks like image processing, video streaming, and search. This division of labor optimizes performance and lets each component scale independently.

Redundancy and Failover Mechanisms

Outages are the enemy of a social media giant. Facebook counters this threat with extensive redundancy and failover mechanisms. Data is replicated across multiple data centers, so it stays available even if one location fails. Load balancers distribute traffic across many servers, preventing overload on any single machine. Automated systems constantly monitor the health of the infrastructure and reroute traffic when a server or data center goes down. This layered approach is designed so that even significant failures have limited impact on the user experience, which is crucial for a platform as popular as Facebook.
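The health-check-driven rerouting described above can be sketched in a few lines. This is a minimal illustration, not Facebook’s implementation: the `Replica` type, the replica names, and the `is_healthy` callable are hypothetical, and a real system would combine this with load balancing and data replication.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Replica:
    name: str    # hypothetical replica identifier
    region: str  # hypothetical data center / region label


def pick_serving_replica(
    replicas: Sequence[Replica],
    is_healthy: Callable[[Replica], bool],
) -> Optional[Replica]:
    """Return the first healthy replica in priority order, or None if all are down.

    The list order encodes preference (e.g. nearest data center first), so
    traffic only moves to a farther replica when closer ones fail their checks.
    """
    for replica in replicas:
        if is_healthy(replica):
            return replica
    return None


if __name__ == "__main__":
    fleet = [
        Replica("primary", "us-east"),
        Replica("secondary", "us-west"),
        Replica("tertiary", "eu-west"),
    ]
    down = {"primary"}  # simulate a failed data center
    chosen = pick_serving_replica(fleet, lambda r: r.name not in down)
    print(chosen.name if chosen else "no healthy replica")  # prints "secondary"
```

Keeping the health check as a separate callable makes the routing decision easy to test on its own, independent of how health is actually probed.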

Hierarchical Structure of Facebook’s Servers

The sheer scale of Facebook’s infrastructure necessitates a hierarchical organization of its servers. This structure allows for efficient management and resource allocation.

| Tier | Description | Server Types | Location |
|---|---|---|---|
| Edge Servers | Closest to users; serve static content via CDN. | Cache servers, CDN servers | Globally distributed |
| Regional Data Centers | Handle regional traffic and dynamic content. | Web servers, application servers, database servers | Multiple regions worldwide |
| Core Data Centers | Centralized hubs for critical services and data storage. | Database servers, core application servers | Strategic locations |
| Global Load Balancers | Distribute traffic across all tiers. | Specialized load balancing servers | Multiple locations |
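One way to read the tiers is as a lookup chain: a request is answered by the closest tier that has the data, and each miss falls through to the next tier. A minimal sketch, with plain dictionaries standing in for hypothetical edge, regional, and core stores; on a hit it also back-fills the closer tiers so the next request is served nearer the user. Real CDNs add expiry, invalidation, and size limits, all omitted here.

```python
from typing import Dict, List, Optional, Tuple

# Each tier is (tier_name, key -> value store), ordered nearest-first.
# The stores are hypothetical stand-ins for edge, regional and core systems.
Tier = Tuple[str, Dict[str, str]]


def tiered_lookup(tiers: List[Tier], key: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (value, name_of_tier_that_served_it), or (None, None) on a full miss."""
    for depth, (name, store) in enumerate(tiers):
        if key in store:
            value = store[key]
            # Back-fill every nearer tier that missed, like a cache hierarchy.
            for _, nearer_store in tiers[:depth]:
                nearer_store[key] = value
            return value, name
    return None, None


if __name__ == "__main__":
    edge: Dict[str, str] = {}
    regional: Dict[str, str] = {}
    core = {"static/logo.png": "logo-bytes"}
    tiers = [("edge", edge), ("regional", regional), ("core", core)]
    print(tiered_lookup(tiers, "static/logo.png"))  # ('logo-bytes', 'core')
    print(tiered_lookup(tiers, "static/logo.png"))  # ('logo-bytes', 'edge')
```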

Impact of Server Configuration on Outage Duration

A Facebook outage isn’t just a minor inconvenience; it’s a global event impacting billions. The speed and efficiency of recovery depend heavily on the underlying server infrastructure and its configuration. A well-designed and resilient system can minimize downtime, while a poorly configured one can extend outages significantly, leading to massive financial losses and reputational damage. Understanding the impact of server configuration on outage duration is crucial for any large-scale online service.

Different server configurations directly influence how quickly a service can be restored after a failure. Factors like redundancy, load balancing, and automated failover mechanisms play a critical role. A system with robust redundancy, for example, can seamlessly switch to backup servers in case of a primary server failure, minimizing disruption. Conversely, a system lacking such redundancy will experience extended downtime as engineers manually work to restore service.
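To see why redundancy shortens outages, consider a simplified model: if each replica is independently available with probability a, the service is down only when all n replicas are down at once, so overall availability is 1 - (1 - a)^n. The independence assumption is optimistic (a bad configuration push or a shared network dependency can take every replica down together), so treat these numbers as an upper bound rather than a prediction.

```python
def combined_availability(per_replica: float, replicas: int) -> float:
    """Availability of n redundant replicas, assuming failures are independent."""
    if not 0.0 <= per_replica <= 1.0 or replicas < 1:
        raise ValueError("per_replica must be in [0, 1] and replicas >= 1")
    # The service is unavailable only if every replica is down simultaneously.
    return 1.0 - (1.0 - per_replica) ** replicas


def yearly_downtime_hours(availability: float) -> float:
    """Expected downtime over a 365-day year for a given availability."""
    return (1.0 - availability) * 365 * 24


if __name__ == "__main__":
    for n in (1, 2, 3):
        a = combined_availability(0.99, n)
        print(f"{n} replica(s): availability={a:.6f}, "
              f"downtime~{yearly_downtime_hours(a):.3f} h/yr")
```

With 99% availability per replica, one replica means roughly 87.6 hours of downtime a year, while two independent replicas bring that under one hour.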

Recovery Time Comparison Across Server Configurations

The recovery time for a server outage varies drastically depending on the chosen configuration. Consider a hypothetical scenario in which Facebook experiences a massive surge in traffic that overwhelms its primary servers. The recovery times below are illustrative estimates, not measured Facebook figures.

| Server Configuration | Redundancy | Load Balancing | Estimated Recovery Time |
|---|---|---|---|
| Basic Configuration (No Redundancy) | None | None | 6-12 hours (or longer) |
| Redundant Configuration (Active-Passive) | Active-Passive servers | Basic load balancing | 30-60 minutes |
| Highly Redundant Configuration (Active-Active) | Multiple active servers | Advanced load balancing, geo-redundancy | 5-15 minutes |
| Advanced Configuration (Automated Failover) | Multiple active-active clusters, geographically distributed | Sophisticated load balancing, automated failover | <5 minutes (near-instantaneous) |

The table above illustrates how different configurations translate into varying recovery times. A basic configuration, lacking redundancy and load balancing, requires extensive manual intervention, leading to prolonged outages. In contrast, a highly redundant system with automated failover can minimize downtime to a matter of minutes, ensuring business continuity and user satisfaction.

Hypothetical Scenario: Impact of Server Configuration on Outage Resolution

Let’s imagine a hypothetical scenario in which a major software bug causes widespread server crashes across Facebook’s infrastructure. In a system with minimal redundancy, engineers would need to identify the root cause of the bug, manually deploy a fix to each affected server, and then restart those servers one by one. This process could easily take several hours, resulting in a significant outage. A system with robust redundancy and automated failover could instead shift traffic to servers that are still healthy while the bug is being addressed. This works only if some servers are unaffected, for example because the faulty release was rolled out in stages rather than everywhere at once, since redundant copies running the same buggy code would crash too. Automated deployment would then push the fix far faster than manual work. The difference in outage duration could be several hours of downtime versus a few minutes of degraded service. This highlights the crucial role of proactive server configuration in mitigating the impact of unforeseen events.
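A common way to limit the blast radius of a faulty release is a staged (canary) rollout: ship the new version to a small wave of servers, check their health, and continue only if they look fine, rolling back automatically if errors spike. A minimal sketch; `deploy_to` and `error_rate` are hypothetical callables, and the wave sizes and the 1% error-rate threshold are assumptions chosen for the example, not Facebook’s deployment system.

```python
from typing import Callable, List, Sequence


def staged_rollout(
    waves: Sequence[List[str]],
    deploy_to: Callable[[List[str], str], None],  # hypothetical deploy hook
    error_rate: Callable[[List[str]], float],     # hypothetical health probe
    new_version: str,
    old_version: str,
    max_error_rate: float = 0.01,                 # assumed threshold
) -> bool:
    """Deploy wave by wave; roll back everything deployed so far if a wave is unhealthy.

    Returns True if every wave passed, False if a rollback happened.
    """
    deployed: List[str] = []
    for wave in waves:
        deploy_to(wave, new_version)
        deployed.extend(wave)
        if error_rate(wave) > max_error_rate:
            # Revert every server touched so far, not just the failing wave.
            deploy_to(deployed, old_version)
            return False
    return True


if __name__ == "__main__":
    versions = {f"web{i}": "v1" for i in range(10)}

    def deploy_to(hosts: List[str], version: str) -> None:
        for h in hosts:
            versions[h] = version

    # Simulate a buggy v2: any host running it reports a 50% error rate.
    def error_rate(hosts: List[str]) -> float:
        return 0.5 if any(versions[h] == "v2" for h in hosts) else 0.0

    waves = [["web0"], ["web1", "web2"], [f"web{i}" for i in range(3, 10)]]
    ok = staged_rollout(waves, deploy_to, error_rate, "v2", "v1")
    print(ok, set(versions.values()))  # False {'v1'}
```

Because the first wave is a single server, the buggy release is caught and reverted before it reaches the other nine.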

Software and Application Configuration

Software and application configurations are the unsung heroes (or villains, depending on how well they’re managed) of internet stability. A seemingly minor tweak in a config file can cascade into a massive outage, while robust configuration management can prevent even the most ambitious hacker from bringing down the house. Think of it as the intricate wiring of a skyscraper: one loose wire can cause a blackout, but proper maintenance keeps everything humming along.

Proper software and application configuration plays a crucial role in preventing outages by ensuring that all systems are working together harmoniously and securely. This includes everything from database settings to API keys and load balancing parameters. A misconfigured server, a flawed application setting, or a missing security patch can easily lead to downtime, data loss, or security breaches.
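A cheap safeguard against the misconfigured-server case is to validate configuration before it is pushed, and refuse to deploy if any check fails. A minimal sketch, assuming a hypothetical load-balancer config with `backends`, `health_check_interval_s`, and `max_connections` keys; the rules themselves are illustrative.

```python
from typing import Any, Dict, List


def validate_lb_config(cfg: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the config passed every check.

    The keys checked here are hypothetical examples, not a real product's schema.
    """
    errors: List[str] = []

    backends = cfg.get("backends")
    if not isinstance(backends, list) or not backends:
        errors.append("backends must be a non-empty list")
    elif len(set(backends)) != len(backends):
        errors.append("backends contains duplicates")
    elif len(backends) < 2:
        errors.append("at least two backends are needed for redundancy")

    interval = cfg.get("health_check_interval_s")
    if not isinstance(interval, (int, float)) or not 1 <= interval <= 60:
        errors.append("health_check_interval_s must be a number between 1 and 60")

    max_conn = cfg.get("max_connections")
    if not isinstance(max_conn, int) or max_conn <= 0:
        errors.append("max_connections must be a positive integer")

    return errors


if __name__ == "__main__":
    good = {"backends": ["10.0.0.1", "10.0.0.2"], "health_check_interval_s": 5, "max_connections": 1000}
    bad = {"backends": ["10.0.0.1"], "health_check_interval_s": 0, "max_connections": -1}
    print(validate_lb_config(good))       # []
    print(len(validate_lb_config(bad)))   # 3
```

Returning every problem at once, rather than stopping at the first, lets a deployment pipeline report the full list before blocking the push.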

Software Vulnerabilities and Outages

Software vulnerabilities are weaknesses in the code that malicious actors can exploit to gain unauthorized access or disrupt services. They range from simple coding errors to complex design flaws. For example, a buffer overflow vulnerability can allow an attacker to inject malicious code into a system, potentially causing a crash or a denial-of-service attack. Similarly, SQL injection flaws can allow attackers to manipulate database queries, leading to data breaches or system instability. The infamous Heartbleed vulnerability, disclosed in 2014 in the OpenSSL cryptography library, is a prime example: it affected a large share of the web’s HTTPS servers and let attackers read chunks of server memory that could contain passwords and private keys. Another example is Shellshock, a flaw in the Bash shell disclosed the same year, which allowed attackers to execute arbitrary commands on vulnerable systems and could cause widespread disruption.
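The SQL injection case is easiest to see side by side. A minimal sketch using Python’s built-in `sqlite3` module and an in-memory database (the `users` table and its rows are made up for illustration): string formatting lets the input rewrite the query, while a parameterized query treats the input strictly as data.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    """Create an in-memory database with a small, made-up users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
    conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 0), ("bob", 1)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: user input is pasted directly into the SQL text.
    query = f"SELECT name FROM users WHERE name = '{name}' ORDER BY name"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safe: the driver binds `name` as a value via the ? placeholder, never as SQL.
    query = "SELECT name FROM users WHERE name = ? ORDER BY name"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = setup()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # [('alice',), ('bob',)]: every row leaks
    print(find_user_safe(conn, payload))    # []: no user has that literal name
```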

Best Practices for Software Management and Updates

Effective software management is paramount to minimizing downtime. This includes a robust patching strategy, regularly scheduled updates, and rigorous testing before changes reach production. Imagine a construction crew that always uses the latest tools and safety protocols: that’s the level of preparedness software needs. Testing updates in a staging environment that mirrors production lets teams find and fix problems without affecting users. Automated deployment tools streamline the process and reduce human error. Regular security audits, vulnerability scanning, and penetration testing help identify and address weaknesses before they are exploited.
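Part of a patching strategy is knowing which machines are still behind. A minimal sketch, assuming a hypothetical inventory that maps hosts to installed package versions and a table of minimum patched versions; the package names are made up, and versions are simplified to dotted integers, which real package versions often are not.

```python
from typing import Dict, List, Tuple


def parse_version(v: str) -> Tuple[int, ...]:
    """Turn '2.4.51' into (2, 4, 51) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in v.split("."))


def hosts_needing_patch(
    inventory: Dict[str, Dict[str, str]],  # hypothetical: host -> {package: version}
    minimum_patched: Dict[str, str],       # hypothetical: package -> first fixed version
) -> List[Tuple[str, str, str]]:
    """Return (host, package, installed_version) for every package below its patched floor."""
    stale = []
    for host, packages in sorted(inventory.items()):
        for pkg, installed in sorted(packages.items()):
            floor = minimum_patched.get(pkg)
            if floor is not None and parse_version(installed) < parse_version(floor):
                stale.append((host, pkg, installed))
    return stale


if __name__ == "__main__":
    inventory = {
        "web01": {"libexample": "1.2.9", "webd": "3.0.0"},
        "web02": {"libexample": "1.2.10", "webd": "2.9.4"},
    }
    minimum = {"libexample": "1.2.10", "webd": "3.0.0"}
    print(hosts_needing_patch(inventory, minimum))
    # [('web01', 'libexample', '1.2.9'), ('web02', 'webd', '2.9.4')]
```

Comparing tuples of integers avoids the classic string-comparison bug where "1.2.9" sorts after "1.2.10".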

Version Control and Outage Mitigation

Version control systems such as Git are indispensable for managing software changes and mitigating outage risks. They keep a detailed history of every code modification, much like a meticulous record of every change made to a building’s blueprints, so developers can quickly revert to a previous stable version when a deployment goes wrong. Branching strategies, such as separate development and testing branches, further isolate risky changes and keep them away from live systems. The ability to return quickly to a known good state is a critical part of limiting both the duration and the impact of an outage.
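The "known good state" idea can be modeled as a release history in which each deploy is marked healthy or unhealthy once it has been observed in production. A minimal sketch with a hypothetical `Release` record and made-up commit hashes; in a Git-based workflow, the chosen commit would then be redeployed or the bad changes undone with a standard command such as `git revert`.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Release:
    version: str
    commit: str              # e.g. a Git commit hash (hypothetical values below)
    healthy: Optional[bool]  # None = not yet evaluated in production


def rollback_target(history: List[Release]) -> Optional[Release]:
    """Return the newest release before the live one that was confirmed healthy."""
    # history is ordered oldest -> newest; the last entry is what is live now.
    for release in reversed(history[:-1]):
        if release.healthy:
            return release
    return None


if __name__ == "__main__":
    history = [
        Release("v41", "a1b2c3d", True),
        Release("v42", "d4e5f6a", False),
        Release("v43", "0f9e8d7", None),  # currently live and misbehaving
    ]
    target = rollback_target(history)
    print(target.version if target else "nothing to roll back to")  # v41
```

Skipping releases that were never confirmed healthy (such as v42 here) avoids rolling back into another broken build.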

Monitoring and Alerting Systems

Imagine a colossal machine, Facebook’s server infrastructure, humming with activity. Billions of users rely on its seamless operation, and a single glitch can trigger a domino effect that causes widespread disruption. This is where robust monitoring and alerting systems become absolutely critical: they are the early warning system that can stop a full-blown outage before it starts. They are the silent guardians, constantly vigilant, keeping the digital world running smoothly.

Real-time monitoring is the backbone of any effective outage response strategy. It allows for immediate detection of anomalies, enabling swift intervention before minor issues escalate into major disruptions. Think of it as a doctor constantly checking a patient’s vital signs – any deviation from the norm triggers an immediate response. The faster the problem is identified, the faster it can be resolved, minimizing downtime and user frustration.
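A simple way to flag "deviation from the norm" automatically is to compare each new sample against a rolling baseline. A minimal sketch using a rolling mean and standard deviation (a z-score test); the window size, the five-sample warm-up, and the threshold of three standard deviations are assumptions, and production systems typically use more robust detectors.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque


class RollingAnomalyDetector:
    """Flags samples more than `threshold` standard deviations from the recent mean."""

    def __init__(self, window: int = 30, threshold: float = 3.0) -> None:
        self.samples: Deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        """Record a sample; return True if it looks anomalous versus the prior window."""
        anomalous = False
        if len(self.samples) >= 5:  # need a minimal baseline before judging
            baseline = list(self.samples)
            mu = mean(baseline)
            sigma = pstdev(baseline)
            if sigma > 0:
                anomalous = abs(value - mu) / sigma > self.threshold
            else:
                anomalous = value != mu  # flat baseline: any change is unusual
        self.samples.append(value)
        return anomalous


if __name__ == "__main__":
    detector = RollingAnomalyDetector(window=10)
    cpu = [41, 40, 42, 39, 41, 40, 43, 97]  # last sample is a sudden CPU spike
    flags = [detector.observe(x) for x in cpu]
    print(flags)  # only the final sample is flagged
```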

Types of Monitored Metrics

Server health is assessed through a comprehensive suite of metrics. These metrics provide a holistic view of the system’s performance, identifying potential bottlenecks and areas of concern. For example, CPU utilization, memory usage, disk I/O, network traffic, and application response times are continuously monitored. Anomalies in any of these areas can signal impending trouble. Visual representations, such as graphs and dashboards, provide at-a-glance insights into the system’s overall health, making it easier to spot potential problems. Imagine a dashboard displaying real-time graphs showing CPU usage spiking – that’s a clear indication something needs attention.
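Response times are usually tracked as percentiles rather than averages, because an average mixes the typical request and the slow tail into one number that describes neither. A minimal sketch of the nearest-rank percentile over a window of latency samples (the sample values are made up).

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample that is >= p% of all samples."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p / 100 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    latencies_ms = [12, 15, 11, 14, 13, 12, 16, 14, 13, 950]  # one very slow request
    print(sum(latencies_ms) / len(latencies_ms))  # mean: 107.0
    print(percentile(latencies_ms, 50))           # median: 13
    print(percentile(latencies_ms, 99))           # p99: 950
```

Here the mean of 107 ms matches no real request, while the median shows the typical experience and the p99 exposes the slow tail.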

Alert Generation Mechanisms

When critical thresholds are breached, automated alerting mechanisms are triggered. These systems use various methods to notify the relevant teams. Email alerts are a common approach, but more sophisticated systems might use SMS messages, instant messaging platforms like Slack, or even automated phone calls for critical incidents. These alerts provide concise information about the issue, its severity, and the affected systems, allowing engineers to immediately start troubleshooting. The goal is to get the right people alerted quickly and efficiently.
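Alerting rules often require a threshold to be breached for several consecutive checks before notifying anyone, so a single noisy sample does not wake an engineer, and they route alerts by severity. A minimal sketch; the thresholds, the three-sample rule, and the channel names are assumptions, and the function only decides where an alert would go rather than calling any real email, SMS, or Slack API.

```python
from typing import Dict, List, Sequence

# Hypothetical routing table: which channels each severity notifies.
CHANNELS: Dict[str, List[str]] = {
    "warning": ["email", "slack"],
    "critical": ["email", "slack", "sms", "phone"],
}


def evaluate_alert(
    recent_values: Sequence[float],
    warn_at: float,
    crit_at: float,
    sustained: int = 3,  # assumed: breach must last this many consecutive samples
) -> List[str]:
    """Return the channels to notify, or [] if no threshold was breached long enough."""
    if len(recent_values) < sustained:
        return []
    window = recent_values[-sustained:]
    if all(v >= crit_at for v in window):
        return list(CHANNELS["critical"])
    if all(v >= warn_at for v in window):
        return list(CHANNELS["warning"])
    return []


if __name__ == "__main__":
    cpu = [55, 92, 60, 85, 88, 91]  # one early spike, then a sustained climb
    print(evaluate_alert(cpu, warn_at=80, crit_at=95))  # ['email', 'slack']
```

The isolated spike to 92 is ignored, but the three consecutive readings above 80 trigger a warning.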

Essential Metrics for Outage Prevention

A proactive approach to outage prevention relies on monitoring key performance indicators (KPIs). Regularly tracking these metrics helps identify potential issues before they cause significant disruptions.

- CPU Utilization: Sustained high CPU usage can indicate overloaded servers.

- Memory Usage: Memory leaks or insufficient memory allocation can lead to application crashes.

- Disk I/O: High disk I/O could signal slow database queries or insufficient storage.

- Network Traffic: Unusual spikes or drops in network traffic might point to connectivity problems.

- Application Response Times: Slow response times indicate performance bottlenecks within the application itself.

- Error Rates: A sudden increase in error rates indicates a potential problem within the application or infrastructure.

- Database Performance: Slow database queries can significantly impact application performance.

- Log File Analysis: Regular analysis of log files can reveal subtle issues that might otherwise go unnoticed.
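Error rates and log analysis often go together: counting failed requests in access logs produces an error-rate signal that can feed the same alerting rules. A minimal sketch, assuming a hypothetical log format in which the HTTP status code is the third whitespace-separated field; real log formats vary and usually warrant a proper parser.

```python
from typing import Iterable


def server_error_rate(log_lines: Iterable[str]) -> float:
    """Fraction of parseable requests that returned a 5xx status.

    Assumes a hypothetical '<timestamp> <method> <status>' line format.
    """
    total = errors = 0
    for line in log_lines:
        fields = line.split()
        if len(fields) < 3 or not fields[2].isdigit():
            continue  # skip malformed lines instead of crashing the analysis
        total += 1
        if 500 <= int(fields[2]) <= 599:
            errors += 1
    return errors / total if total else 0.0


if __name__ == "__main__":
    sample = [
        "2024-01-01T00:00:01Z GET 200",
        "2024-01-01T00:00:02Z GET 503",
        "2024-01-01T00:00:03Z POST 201",
        "garbled line",
        "2024-01-01T00:00:04Z GET 500",
    ]
    print(server_error_rate(sample))  # 0.5 (2 of 4 parseable requests)
```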

So, the next time you see that dreaded “Facebook is down” message, you’ll have a much deeper appreciation for the monumental task of keeping a global social network online. From the seemingly simple act of configuring a server to the complex interplay of hardware, software, and network infrastructure, every element plays a critical role. Understanding the intricacies of Facebook’s server configuration isn’t just about technical details; it’s about understanding the resilience required to manage a platform that touches billions of lives daily. The constant evolution of technology means the fight against outages is ongoing – a never-ending quest for improved server configurations and disaster recovery strategies.
